When a user makes a request to a website or web application, the first response from the server is an important event. Even though this event only starts the chain of elements loading on the page, it confirms to the browser that your site is alive and sets the stage for content to start appearing. The time it takes is known as server response time, or time to first byte (TTFB). A low TTFB is a positive signal for the user experience: the faster the server starts responding, the faster your page will load, all else being equal. Search engines likely use it as a ranking signal. Google, for example, shows an explicit warning in its PageSpeed tool; according to the documentation, the warning kicks in when server response time exceeds 200 ms.

Measuring server response time

As you iterate, make one change at a time and test whether your results improve. You may also want to try different hosting options and cloud computing providers. Many of them offer free tiers, 30-day money-back guarantees, or inexpensive paid instances billed by the hour. Simulate what you believe to be your average user: their location and the pages of your site they would most likely access. Favor popular pages with lots of content, so that you test a loaded page instead of a light one.
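To judge whether a single change helped, it is useful to compare several measurements instead of one, since individual numbers vary widely. The sketch below compares medians of two sets of samples. The function name `compare_runs` and the sample values are made up for illustration; plug in your own measurements.

```python
from statistics import median


def compare_runs(before_ms, after_ms):
    """Compare two sets of response-time samples (in milliseconds) by their medians."""
    before = median(before_ms)
    after = median(after_ms)
    # A negative change means the "after" configuration responded faster.
    change_pct = (after - before) * 100 / before
    return {"before_ms": before, "after_ms": after, "change_pct": change_pct}


# Made-up sample values, for illustration only.
baseline = [212, 198, 240, 205, 201]
with_change = [110, 104, 131, 99, 108]

result = compare_runs(baseline, with_change)
print(
    f"median {result['before_ms']} ms -> {result['after_ms']} ms "
    f"({result['change_pct']:+.1f}%)"
)
```

The median is used here because a single slow outlier would skew an average; that is a design choice, not the only reasonable one.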

Browser method

Using a browser like Chrome, here is how you can measure the server response time:

- Right-click on a web page and select Inspect

- Go to the Network tab (reload the page if the list is empty)

- Read the Time value for the first item with a 200 response code

This gives you the response time from your current location. To measure the response time from other locations, you could use the Opera browser and enable its built-in VPN function, then select the location closest to the one you want to test. Once the VPN is enabled, the steps are the same as above.
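If you would rather script the measurement than read it from the browser, the following is a minimal sketch using only the Python standard library. It times the gap between sending a GET request and receiving the response status line and headers, which approximates TTFB; connection setup (TCP and TLS) is deliberately left outside the timed section, so the result will not match the browser's Time column exactly. The function name `measure_ttfb` and the User-Agent string are assumptions made for this example.

```python
import http.client
import time
from urllib.parse import urlsplit


def measure_ttfb(url: str, timeout: float = 10.0) -> float:
    """Return the seconds between sending a GET and receiving the response headers."""
    parts = urlsplit(url)
    if parts.scheme == "https":
        conn = http.client.HTTPSConnection(parts.hostname, parts.port, timeout=timeout)
    else:
        conn = http.client.HTTPConnection(parts.hostname, parts.port, timeout=timeout)

    path = parts.path or "/"
    if parts.query:
        path += "?" + parts.query

    try:
        # Open the connection first so TCP/TLS setup is not part of the timing.
        conn.connect()
        start = time.perf_counter()
        conn.request("GET", path, headers={"User-Agent": "ttfb-sketch"})
        # getresponse() returns once the status line and headers have arrived.
        response = conn.getresponse()
        elapsed = time.perf_counter() - start
        response.read()  # drain the body before closing
        return elapsed
    finally:
        conn.close()
```

Calling `measure_ttfb` on one of your own URLs several times and looking at the spread gives a better picture than a single run. Like the browser method, it measures from wherever the script runs.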

Web services method

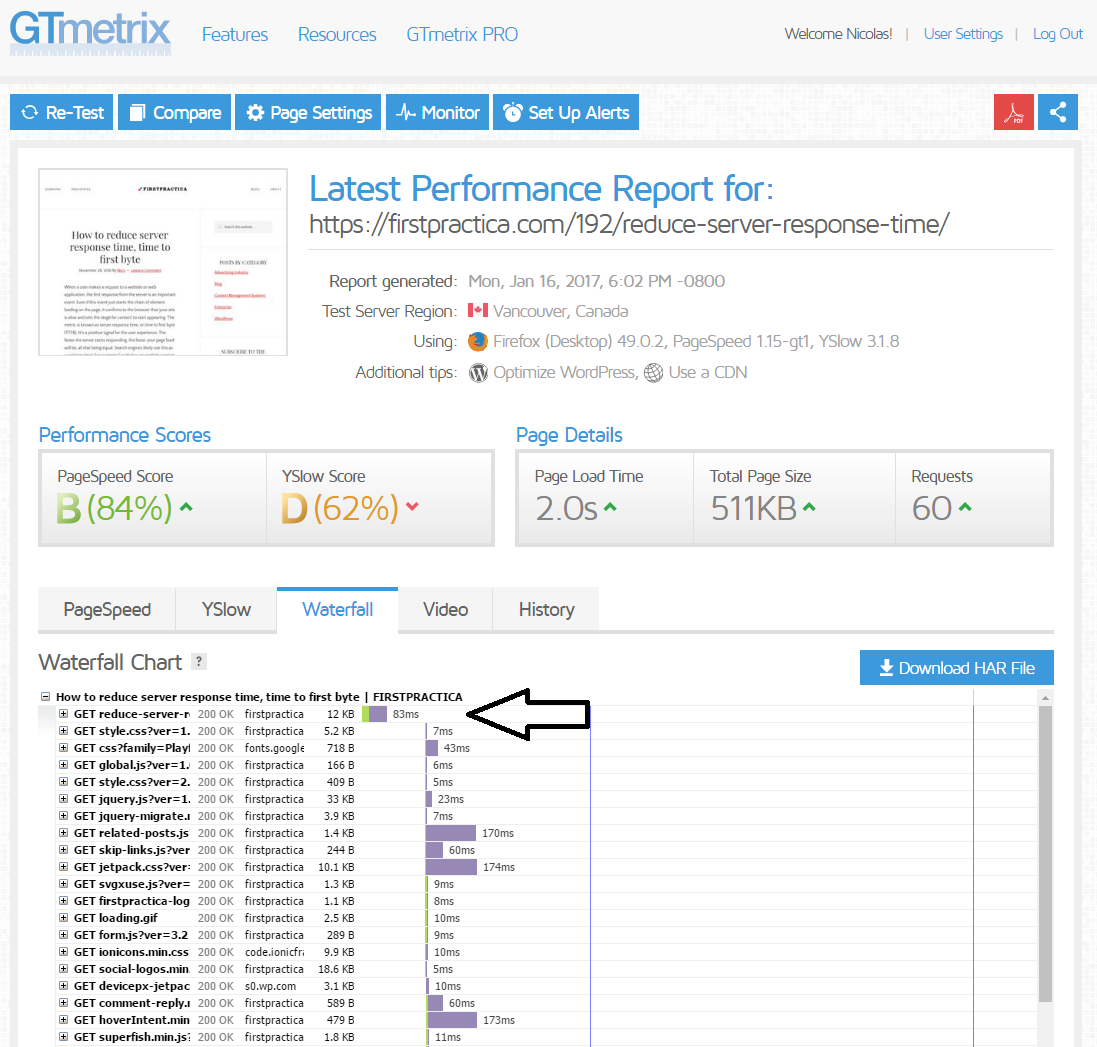

Try gtmetrix.com or another web service that offers a similar waterfall view. You can choose the location where the test is run. In some cases, as with gtmetrix, you will need to create an account in order to change the location.

Our test resulted in an 83 ms response time. Numbers vary widely, but it gives you an idea.

How to reduce TTFB and server response time for dynamic websites

There are many ways to accelerate your site by reducing time to first byte. They are not alternative methods, rather they can be used in combination.

Get a tier-1 server

Shared hosting platforms offer simplicity and low prices to get started. The tradeoff is that you share a machine with a large number of other sites. If those sites consume the shared resources, your performance goes down. The host has an incentive to increase packing density, that is, to put more and more sites on one machine, to increase profit margins. If you don't expect fast performance, that may be acceptable: whether the server takes 1 or 2 seconds to respond, it eventually does. Another issue with shared hosting is that hosts sometimes place a pool of shared servers behind a front-end load balancer. The load balancer checks resource availability in the backend for each request, and that think time adds to the total latency. Nowadays tier-1 servers can be found at affordable prices. If you're willing to manage the infrastructure, you can get your own VPS, which gives you dedicated resources and much better performance.

Host your site or app near your users

Data travels very fast over the internet, but still slower than the speed of light. Depending on how requests are routed, distance can add anywhere from a few milliseconds to hundreds of milliseconds, especially if there are bottlenecks between the browser and the server. Check your site or app analytics. Where are the bulk of your users? Where is your target market? This is where you'll want to host your site or app. Cloud providers now offer many locations to choose from, and picking the right one the first time saves you from having to relocate your server infrastructure later.
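A rough back-of-the-envelope calculation shows why distance matters. Light in optical fiber travels at roughly two thirds of its speed in a vacuum, about 200,000 km per second, so distance alone sets a floor on round-trip time before any routing or server work is counted. The sketch below computes that floor; the function name and the 6,000 km example distance are illustrative assumptions, and real routes are longer than a straight line.

```python
# Approximate speed of light in optical fiber (about 2/3 of c in a vacuum).
SPEED_IN_FIBER_KM_PER_S = 200_000


def min_round_trip_ms(distance_km: float) -> float:
    """Lower bound on round-trip time, in milliseconds, for a given one-way distance."""
    # Out and back, converted from seconds to milliseconds.
    return 2 * distance_km * 1000 / SPEED_IN_FIBER_KM_PER_S


# Illustrative one-way distance between a user and a far-away server.
print(min_round_trip_ms(6000))  # 60.0
```

A user 6,000 km from your server therefore cannot see a round trip faster than about 60 ms, however fast the server itself is.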

Tune your site or app for speed

So far, we are still looking at cases where requests need to reach your origin server. Even for the server to say “I’m alive”, which is all the server response requires, it needs free cycles available immediately. The more unnecessary compute tasks you take off your server, the more free cycles it will have. Consider page caching plugins like WP Super Cache if you are using WordPress, reverse proxy caching with a server such as Nginx (which needs a good amount of memory), object caching with a store like Memcached (also memory hungry), and newer, faster language runtimes. Our server response time dropped by 50% when we upgraded from PHP 5.6 to PHP 7 a few weeks back. Not many upgrades give such a boost. PHP 7 is one of those rare, giant steps forward, with generally excellent compatibility with previous versions.
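To make the idea behind caching concrete, here is a minimal in-memory sketch of the pattern that page and object caches rely on: do the expensive work once, keep the result for a limited time, and answer later requests from memory. This is not how WP Super Cache, Nginx, or Memcached are implemented; the class `TTLCache` and its method names are hypothetical and exist only for this illustration.

```python
import time
from typing import Any, Callable, Dict, Hashable, Tuple


class TTLCache:
    """Keep computed values in memory until they are older than ttl_seconds."""

    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic):
        self._ttl = ttl_seconds
        self._clock = clock  # injectable so the cache can be tested without sleeping
        self._store: Dict[Hashable, Tuple[float, Any]] = {}

    def get_or_compute(self, key: Hashable, compute: Callable[[], Any]) -> Any:
        now = self._clock()
        entry = self._store.get(key)
        if entry is not None and entry[0] > now:
            # Cache hit: skip the expensive work entirely.
            return entry[1]
        # Cache miss or expired entry: do the work and remember the result.
        value = compute()
        self._store[key] = (now + self._ttl, value)
        return value
```

With a cache like this in front of a slow page render or database query, only the first request in each time window pays the full cost. The time-to-live is the tradeoff knob: a longer value frees more server cycles but serves older content.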

Also check for unused code that could be getting in the way and slowing your site. .htaccess files can get bloated with unused directives. Make a backup before removing anything, so that if a change breaks your site, or you want to revert for any other reason, you can restore the backup copy. The same goes for your database, which should contain only content that's useful. Often, when plugins are activated, then deactivated and deleted, they leave tables with data behind in the database. If never deleted, these tables accumulate, and you end up with a bloated database that is slow to respond to queries. Once again, make a backup before attempting any change to the database so you can safely revert if something goes wrong.

Create and validate AMP Pages

We covered AMP in other articles on this site. AMP is a game changer for server response time because Google created a cache specifically for AMP and hosts it for you. When a page is AMP validated, it is served from the Google cache and does not need to hit your origin to load. Assume response time will be near zero for valid AMP pages.

Create and validate Facebook Instant Articles

The same applies to Facebook Instant Articles. As with AMP, Facebook caches your content and serves it directly to the user, so requests mostly do not hit your origin server. Assume response time will be near zero for valid FBIAs.

Add a CDN

Content Delivery Networks are cache servers distributed all over the planet. They solve many of the problems and much of the slowness caused by requests having to travel long distances to your origin server, particularly for static assets. CDN services like Cloudflare include a free plan.

Reducing server response time by turning dynamic pages into static pages

Full Page Caching CDN: higher risk and tradeoffs, higher reward in performance

In their base configuration, CDNs may not meaningfully reduce server response time, because they only cache static assets like images. Page requests still need to go to your origin if dynamic content is detected, or if the CDN is configured that way.

Some CDNs provide an option to enable full page caching. In full page cache mode, the entire page is copied to a local CDN repository and can be served 100% from that location. We've seen dramatic improvements in server response times with full page caching on, from 200 ms down to under 50 ms and sometimes under 10 ms.

Watch out for tradeoffs, however. Your site needs to be fully responsive, with one CSS for desktop and mobile, or your desktop visitors may see the mobile page and vice versa. User comments and anything else that changes often may not refresh as fast, given that the CDN will keep serving a static copy to users even after you update your origin. But if configured correctly, full page caching can speed up your server response like almost nothing else.
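How long a cached copy can stay stale is commonly steered by the `Cache-Control` header your origin sends. The sketch below shows one simplified way a shared cache could read that header: `s-maxage` applies to shared caches and takes precedence over `max-age`, while `private`, `no-store`, and `no-cache` are treated here as "do not serve from the edge without going back to the origin". The function name `edge_ttl_seconds` is an assumption for this example, and real CDNs apply their own rules and may override these headers, so check your provider's documentation.

```python
from typing import Optional


def edge_ttl_seconds(cache_control: str) -> Optional[int]:
    """Return how long a shared cache may reuse a response, or None if unspecified."""
    directives = {}
    for part in cache_control.split(","):
        name, _, value = part.strip().partition("=")
        name = name.strip().lower()
        if name:
            directives[name] = value.strip().strip('"')

    # Simplification: treat all three as "always go back to the origin".
    if any(d in directives for d in ("no-store", "private", "no-cache")):
        return 0

    # s-maxage targets shared caches such as CDNs and wins over max-age.
    for name in ("s-maxage", "max-age"):
        if name in directives:
            try:
                return max(0, int(directives[name]))
            except ValueError:
                return 0  # malformed value: be conservative

    return None


print(edge_ttl_seconds("public, max-age=60, s-maxage=300"))  # 300
```

A short edge lifetime keeps comments and frequently updated content fresher at the cost of more trips to your origin; a long one does the opposite.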
